This article is about color bit depth on displays. We will also look at how changing the bit depth affects picture color and quality, and what changes when you move from 10-bit to 12-bit color.

So, 10-bit color: It’s important and new, but…what is it? Before we dive into that, the first question is what is bit depth, and why does it matter for displays?

· What Is Bit Depth

In computer programming, variables are stored in formats with differing numbers of bits (i.e., ones and zeros), depending on how much range that variable needs. Each bit you add doubles the number of distinct values you can store: n bits give 2^n values. So if you only need two values (0 and 1), one bit is enough. Two bits give you four values, three bits give you eight, and so on.
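To make the doubling concrete, here is a minimal Python sketch. The helper names `distinct_values` and `bits_needed` are our own, chosen for illustration; they simply apply the 2^n rule and its inverse.

```python
def distinct_values(bits: int) -> int:
    """Number of different values an unsigned field of `bits` bits can hold."""
    return 1 << bits  # same as 2 ** bits


def bits_needed(count: int) -> int:
    """Smallest number of bits that can represent `count` distinct values (count >= 1)."""
    return max(1, (count - 1).bit_length())


for bits in range(1, 5):
    n = distinct_values(bits)
    print(f"{bits} bit(s): {n} values (0 to {n - 1})")
```

Note that 256 values fit in 8 bits, but a 257th value forces a ninth bit, which doubles the range again.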

The other thing to note here is that in general, the fewer bits, the better. Fewer bits mean less information, so whether you’re transmitting data over the internet or throwing it at your computer’s processing capabilities, you get whatever it is you want faster.

However, you need enough bits to actually count up to the highest (or lowest) number you want to reach. Going past that limit, known as overflow (or underflow, when going below the minimum), is one of the most common software errors. It’s the type of bug behind the famous (and disputed) story of Gandhi becoming a warmongering, nuke-throwing tyrant in Civilization: when his “war” rating tried to go negative, it supposedly wrapped around to its maximum possible setting. So it’s a balancing act: the fewer bits you use, the better, but you should never use fewer than you need.
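The wraparound in that story can be sketched in a few lines of Python. This assumes a hypothetical rating stored as an unsigned 8-bit value (0 to 255); it is an illustration of the mechanism, not the game’s actual code.

```python
def subtract_u8(value: int, amount: int) -> int:
    """Subtract the way an unsigned 8-bit counter would: results wrap modulo 256."""
    # Python's % with a positive modulus always returns a value in 0..255 here.
    return (value - amount) % 256


rating = 1                      # hypothetical low "war" rating
rating = subtract_u8(rating, 2)  # the result "tries to go negative"
print(rating)                   # wraps around to the maximum, 255
```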

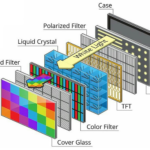

· How Bit Depth Works With Displays

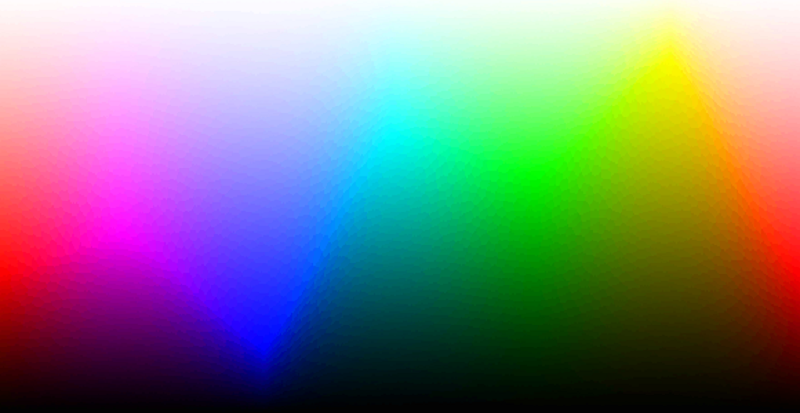

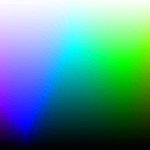

With the image you’re seeing right now, your device is transmitting three different sets of bits per pixel, separated into red, green, and blue colors. The bit depth of these three channels determines how many shades of red, green, and blue your display is receiving, thus limiting how many it can output.
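Because each pixel picks a red, a green, and a blue shade independently, the total number of colors is the per-channel shade count cubed. A minimal Python sketch (function names are our own, for illustration):

```python
def shades_per_channel(bits: int) -> int:
    """Shades one color channel can show at the given bit depth."""
    return 2 ** bits


def total_colors(bits_per_channel: int, channels: int = 3) -> int:
    """Combinations of red, green, and blue shades available per pixel."""
    return shades_per_channel(bits_per_channel) ** channels


for bits in (6, 8, 10, 12):
    print(f"{bits:>2}-bit: {shades_per_channel(bits):>5,} shades/channel, "
          f"{total_colors(bits):>14,} colors")
```

This works out to 64 shades and 262,144 colors at 6 bits, 256 shades and about 16.7 million colors at 8 bits, 1,024 shades and about 1.07 billion colors at 10 bits, and 4,096 shades and about 68.7 billion colors at 12 bits.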

The lowest-end displays (which are uncommon now) have only six bits per color channel. The sRGB standard calls for eight bits per color channel to avoid banding. To match that standard, those six-bit panels use Frame Rate Control (FRC) to dither over time, rapidly alternating a pixel between two neighboring shades so that, on average, it looks like the shade in between. This, hopefully, hides the banding.
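Here is a simplified, single-pixel sketch of the FRC idea in Python. The assumptions are ours: 8-bit codes map linearly onto the 64 levels of a 6-bit panel, and the pattern repeats every four frames. Real panels also vary the pattern across neighboring pixels to hide flicker.

```python
def frc_frames(value_8bit: int, n_frames: int = 4) -> list[int]:
    """Approximate one 8-bit shade on a 6-bit panel by alternating two 6-bit levels.

    Simplified model: linear mapping of 8-bit codes (0-255) onto 6-bit
    levels (0-63) and a fixed cycle of `n_frames` frames.
    """
    target = value_8bit * 63 / 255              # desired 6-bit level, may be fractional
    low = int(target)                           # nearest 6-bit level at or below it
    n_high = round((target - low) * n_frames)   # frames that show the next level up
    return [low + 1] * n_high + [low] * (n_frames - n_high)


frames = frc_frames(136)
print(frames, "average level:", sum(frames) / len(frames))
```

For an 8-bit value of 136 (33.6 on the 6-bit scale), this sketch alternates between levels 34 and 33, averaging 33.5: the panel never shows the in-between shade, but over time it approximates it.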

And what is banding? Banding is a sudden, unwanted jump in color and/or brightness where none is requested. The higher you can count, in this case for outputting shades of red, green, and blue, the more colors you have to choose from, and the less banding you’ll see. Dithering, on the other hand, doesn’t have those in-between colors. Instead, it tries to hide banding by noisily transitioning from one color to another. It’s not as good as a true higher bit depth, but it’s better than nothing.
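You can see why more bits mean less banding by quantizing a smooth gradient. This Python sketch makes simplifying assumptions of our own: a 1,920-pixel-wide gradient from 20% to 30% signal level, plain rounding to the nearest code, and no gamma or dithering. The helper names are hypothetical.

```python
def quantize(signal: float, bits: int) -> int:
    """Round a 0.0-1.0 signal to the nearest code an n-bit channel can store."""
    return round(signal * ((1 << bits) - 1))


def band_stats(start: float, end: float, width: int, bits: int) -> tuple[int, float]:
    """Quantize a horizontal gradient; return (distinct shades, average band width in px)."""
    codes = [quantize(start + (end - start) * i / (width - 1), bits) for i in range(width)]
    distinct = len(set(codes))
    return distinct, width / distinct


for bits in (8, 10, 12):
    shades, band_px = band_stats(0.20, 0.30, 1920, bits)
    print(f"{bits}-bit: {shades} shades, bands about {band_px:.0f} px wide")
```

With these assumptions, 8 bits leaves only about 26 distinct shades across the screen, so each flat band is roughly 70 pixels wide; 10 bits cuts the bands to under 20 pixels.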

With today’s HDR displays, you’re asking for many more colors and a much higher range of brightness to be fed to your display. This, in turn, means more bits of information are needed to store all the colors and brightness in between without incurring banding. The question, then, is how many bits do you need for HDR?

Currently, the most commonly used answer comes from the Barten threshold, based on Peter Barten’s model of how well humans perceive contrast in luminance. After looking it over, Dolby (which developed the curve that maps bits to luminance in the HDR standard used by both Dolby Vision and HDR10) concluded that 10 bits would produce a little noticeable banding, whereas 12 bits wouldn’t produce any at all.
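The luminance curve Dolby developed is known as the Perceptual Quantizer (PQ), standardized as SMPTE ST 2084. The Python sketch below implements the PQ transfer function with its published constants and measures how large a brightness jump a single code value represents near 100 nits at 10 and 12 bits. Assumptions: full-range code values (real video usually uses a narrower "limited" range), and the helper names are our own. It illustrates step size only; it does not reproduce Barten’s threshold analysis.

```python
# SMPTE ST 2084 (PQ) constants
M1 = 2610 / 16384
M2 = 2523 / 4096 * 128
C1 = 3424 / 4096
C2 = 2413 / 4096 * 32
C3 = 2392 / 4096 * 32
PEAK_NITS = 10000.0


def pq_encode(nits: float) -> float:
    """Luminance in nits -> PQ signal in 0.0-1.0 (inverse EOTF)."""
    y = (nits / PEAK_NITS) ** M1
    return ((C1 + C2 * y) / (1 + C3 * y)) ** M2


def pq_decode(signal: float) -> float:
    """PQ signal in 0.0-1.0 -> luminance in nits (EOTF)."""
    p = signal ** (1 / M2)
    return PEAK_NITS * (max(p - C1, 0.0) / (C2 - C3 * p)) ** (1 / M1)


def step_percent(nits: float, bits: int) -> float:
    """Relative luminance jump between neighboring full-range codes near `nits`."""
    levels = (1 << bits) - 1
    code = round(pq_encode(nits) * levels)
    low = pq_decode(code / levels)
    high = pq_decode((code + 1) / levels)
    return (high - low) / low * 100


for bits in (10, 12):
    print(f"{bits}-bit PQ step near 100 nits: {step_percent(100, bits):.2f}%")
```

Each extra two bits splits every step into four, so the 12-bit jumps come out roughly a quarter the size of the 10-bit ones, which is why they fall further below what the eye can detect.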

This is why HDR10 (and HDR10+) uses 10 bits per color channel, trading a little banding for less data to transmit. The Dolby Vision standard uses 12 bits per color channel, which is designed to ensure the best possible picture quality even though it needs more bits. This covers the expanded range of luminance (that is, brightness) that HDR can cover, but what about color?

How many bits are needed to cover a “color gamut” (the range of colors a standard can produce) without banding is harder to define. The scientific reasons are numerous, but they all come back to the fact that it’s difficult to accurately measure just how the human eye sees color.

One problem is that the way human eyes respond to colors seems to change depending on what kind of test you apply. Human color vision depends on “opsins,” the light-sensitive proteins in the eye’s cone cells that respond to long (reddish), medium (greenish), and short (bluish) wavelengths of light. The problem is that different people have somewhat different opsins, so people may see the same shade of color differently from one another depending on genetics.

We can make some educated guesses, though. First, based on observations, eight-bit color in the non-HDR sRGB standard and color gamut can almost, but not quite, cover enough colors to avoid banding. If you look closely at a color gradient on an eight-bit screen, there’s a decent chance you’ll notice a bit of banding. Generally, though, it’s good enough that you won’t see it unless you’re really looking for it.

The two HDR standards have to cover a huge range of brightness and either the P3 color gamut, which is wider than sRGB, or the even wider BT.2020 color gamut. We covered how many bits you need for luminance already, but how many bits do you need for a wider gamut? Well, the P3 gamut holds less than double the number of colors in the sRGB gamut, meaning that, nominally, you need less than one extra bit to cover it without banding. However, the BT.2020 gamut is a little more than double the sRGB gamut, meaning you need more than one extra bit to cover it without banding.
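The reasoning here is the doubling rule from the bit-depth section run in reverse: if a gamut holds N times as many distinguishable colors as sRGB at the same step size, it needs log2(N) extra bits. Written out, treating "number of colors" as a single count per gamut (a simplification the argument relies on):

```latex
\Delta b = \log_2\!\left(\frac{N_{\text{wide}}}{N_{\text{sRGB}}}\right),
\qquad
\frac{N_{\text{P3}}}{N_{\text{sRGB}}} < 2 \;\Rightarrow\; \Delta b_{\text{P3}} < 1,
\qquad
\frac{N_{\text{BT.2020}}}{N_{\text{sRGB}}} > 2 \;\Rightarrow\; \Delta b_{\text{BT.2020}} > 1
```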

What this means is that the HDR10 standard, and 10-bit color in general, does not have enough bit depth to cover both the full HDR luminance range and an expanded color gamut at the same time without banding. Remember, 10-bit color doesn’t quite cover the higher range of brightness by itself, let alone more colors as well. This is part of the reason why HDR10 content, like HLG (which also uses 10 bits), is typically mastered for around 1,000 nits of peak brightness, even though the PQ curve HDR10 uses can describe up to 10,000 nits, the level Dolby Vision is designed to reach. Without pushing the brightness range too far, you can keep apparent banding to a minimum. In fact, because today’s panels have limited brightness and color range, and content is mastered within those limits, very few people can notice the difference between 12-bit and 10-bit signals.
